Monday night, Twitter proved yet again that despite all its shortcomings, it is still the place where some of the best discussions and debates go down for all to see. Normally, I wouldn’t base a blog post around a Twitter debate, but this specific thread was a culmination of a lot of content and discussion over the past few weeks around smart assistants, the smart home and the overall effort to understand how Voice will evolve and mature. Given who was debating and the way it dovetails so nicely with the Alexa Conference, I thought it worthy of a blog post.

Before I jump into the thread, I want to provide some context around the precursors to this discussion. This really all stems from the past few CES shows, but mainly the most recent one. To start, here’s a really good a16z podcast by two of the prominent people in the thread, Benedict Evans and Steven Sinofsky, talking about the smart home coming out of this year’s CES and the broader implications of Voice as a platform:

As they both summarize, one of the main takeaways from CES this year was that seemingly every product is in some way tied to Voice (I wrote about this as the “Alexification of Everything”). The question isn’t really whether we’re going to keep converting our rudimentary, “dumb” devices into internet-connected “smart” devices, but rather, “what does that look like from a user standpoint?” There are a LOT of questions that begin to emerge when you start poking into the idea of Voice as a true computing platform. For example, does this all flow through one central interface (assistant) or multiple?

Benedict Evans followed up on the podcast by writing this piece, further refining the ideas from the above podcast, and shared the article in the tweet below. He does a really good job of distilling a lot of the high-level questions, using history as a reference, to contest the validity of Voice as a platform. He makes a lot of compelling points, which is what led to this fascinating thread of discussion.

To help understand who’s who in this thread, the people I want to point out are as follows: Benedict Evans (a16z), Steven Sinofsky (board partner @ a16z), Brian Roemmele (Voice expert for 30+ years), and Dag Kittlaus (co-founder of Siri and ViV). Needless to say, it’s pretty damn cool to be chilling at home on a Monday night in St. Louis and casually observe some of the smartest minds in this space debate the future of this technology out in the open. What a time to be alive. (I love you, Twitter, you dysfunctional, beautiful beast.)

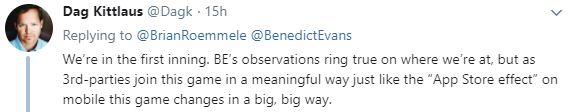

So it starts off with this exchange between Dag and Benedict:

As Dag points out, once the 3rd party ecosystem really starts to open up, we’ll start seeing a Cambrian explosion of what our smart assistants can do via network effects. Benedict, however, is alluding to the same concern that many others have brought up – people can only remember so many skills, or “invocations.” It’s not sustainable to assume that we can create 1 million skills and that users will be able to remember every single one. This guy’s response to Brian encapsulates the concern perfectly:

So what’s the way forward? Again, this all goes back to the big takeaway at the Alexa Conference, something that Brian was hammering home. It’s all about the smart assistant having deeply personalized, contextual awareness of the user:

“The correct answer or solution is the one that is correct to you.” This is the key to understanding what appears to be the only way we move forward in a meaningful way with #VoiceFirst. It’s all about the smart assistant using the contextual information that you provide to better serve you. We don’t need a ton of general data; each person just needs their smart assistant to be familiar with their own personal “small data.” Here Dag expands on this in his exchange with Steven:

So when we’re talking about “deeply personalized, contextual awareness,” what we’re really asking is whether the smart assistant can intelligently access and aggregate all of your disparate data and understand the context in which you’re referring to it. Take location: when you say, “book me on the first flight back home tomorrow,” your smart assistant should understand where you are right now from your current geo-location, and where “home” is from a separate location that you’ve identified to your assistant as home. Factor in more elements, like all of the data you save to your airline profiles, and the assistant will make sure you’re booked with all your preferences and your TSA PreCheck number included. You’re not sitting there telling the assistant to do each part of the task; you’re having it accomplish the whole task in one fell swoop. That is a massive reduction in friction when you add up all the time you spend doing these types of tasks manually each day.
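To make the flight example a bit more concrete, here is a rough Python sketch of what “filling in the blanks from context” could look like. Everything in it is made up for illustration: `UserContext`, `FlightRequest`, `resolve_flight_request`, the cities and the traveler number are all hypothetical, and the keyword matching is a stand-in for real language understanding, not how any actual assistant works.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class UserContext:
    """Hypothetical personal "small data" the assistant already has."""
    current_city: str      # where the user is right now (live geo-location)
    home_city: str         # the place the user has labeled as "home"
    airline_profile: dict  # saved preferences, e.g. seat and traveler number


@dataclass
class FlightRequest:
    origin: str
    destination: str
    travel_date: date
    pick: str              # which flight to choose on that day
    preferences: dict


def resolve_flight_request(utterance: str, ctx: UserContext, today: date) -> FlightRequest:
    """Fill in what the user left unsaid from context instead of asking follow-ups."""
    text = utterance.lower()
    # Naive keyword checks, purely for illustration.
    destination = ctx.home_city if "home" in text else ""
    travel_date = today + timedelta(days=1) if "tomorrow" in text else today
    pick = "earliest" if "first flight" in text else "any"
    return FlightRequest(
        origin=ctx.current_city,
        destination=destination,
        travel_date=travel_date,
        pick=pick,
        preferences=dict(ctx.airline_profile),
    )


# Made-up example data.
ctx = UserContext(
    current_city="Las Vegas",
    home_city="St. Louis",
    airline_profile={"seat": "aisle", "known_traveler_number": "HYPOTHETICAL-123"},
)
request = resolve_flight_request(
    "Book me on the first flight back home tomorrow", ctx, today=date(2018, 2, 5)
)
print(request)
```

The point of the sketch is that one short sentence turns into a complete request (origin, destination, date, preferences) without the user spelling out any of it.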

I don’t think we’re really talking about general AI that’s on par with HAL 9000. That’s something way more advanced and probably much further out. To enable this type of personalized, contextual awareness, the smart assistant would really just need to be able to quickly pull together all of the data you have stored across your disparate apps. Therefore, APIs become essential. In the example described above, your assistant would need to be able to call the APIs of your apps (e.g. the Southwest app where your profile is stored and Google’s app where you have indicated your “home” location) or the 3rd-party skill ecosystem whenever a request is made. It would use what’s already at its disposal via API integrations with apps, in conjunction with the information or functions built into skills. That makes the skill ecosystem paramount to the success of the smart assistant, since skills serve as entirely new functions that the assistant can perform.
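Here is one way the paragraph above could be sketched in Python: app integrations act as data sources, skills act as functions, and the assistant hands a skill whatever data it needs. This is only a toy model under my own assumptions. The `Assistant` class, the source names and the `book_flight_home` intent are hypothetical, and the lambdas stand in for real API calls to apps.

```python
from typing import Callable, Dict, List


class Assistant:
    """Toy model: connected apps supply data, skills supply new functions."""

    def __init__(self) -> None:
        self.sources: Dict[str, Callable[[], dict]] = {}
        self.skills: Dict[str, Callable[[dict], str]] = {}
        self.needs: Dict[str, List[str]] = {}

    def connect_source(self, name: str, fetch: Callable[[], dict]) -> None:
        # In real life this would wrap an API call to an app.
        self.sources[name] = fetch

    def register_skill(self, intent: str, needs: List[str], run: Callable[[dict], str]) -> None:
        self.skills[intent] = run
        self.needs[intent] = needs

    def handle(self, intent: str) -> str:
        if intent not in self.skills:
            return "Sorry, I don't have a skill for that yet."
        missing = [name for name in self.needs[intent] if name not in self.sources]
        if missing:
            return "I need access to: " + ", ".join(missing)
        # Aggregate the user's disparate data, then let the skill do the work.
        data = {name: self.sources[name]() for name in self.needs[intent]}
        return self.skills[intent](data)


assistant = Assistant()
assistant.connect_source("airline", lambda: {"seat": "aisle"})   # stand-in for an airline app
assistant.connect_source("maps", lambda: {"home": "St. Louis"})  # stand-in for a maps app
assistant.register_skill(
    "book_flight_home",
    needs=["airline", "maps"],
    run=lambda d: f"Booking an {d['airline']['seat']} seat to {d['maps']['home']}",
)

reply = assistant.handle("book_flight_home")
print(reply)
```

Every new skill registered this way is a new thing the assistant can do, and every new connected source is more context it can bring to each of those skills.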

It’s really, really early with this technology, so it’s important to temper expectations a bit. We’re not at this point of “deeply personalized, contextual awareness” quite yet, but we’re getting closer. As a random observer of this #VoiceFirst movement, it’s pretty awesome to have your mind blown by Brian Roemmele at the Alexa Conference talking about this path forward, and then even more awesome to have the guy who co-founded Siri & ViV completely validate everything Brian said a few weeks later on Twitter. I think that, as Benedict and Steven pointed out, the current path we’re on is not sustainable, but based on the learnings from Brian and Dag, it’s exciting to know that there is an alternate path ahead to keep progressing our smart assistants and bring to life a vision that is much richer and more intuitive for the user.

Eventually, many of us will prefer to have our smart assistants handy all the time, and what better spot than in our little ear computers?

-Thanks for reading-

Dave

I think it’s not either but both. It needs to get more contextualized, but in the meantime, skill developers need to realize these assistants don’t have these capabilities and create skills that are fun/useful anyway.

Don’t need contextualized conversation for a really fun interactive story. But then, I’m biased. 😉

Fixed the third to last paragraph…thanks for pointing that out, Katie. I really would hate for people to interpret this post as bashing skill development. I’m in the opposite camp!

This is really good. You are a really good writer.